In this episode of In-Ear Insights, listen as CEO Katie Robbert and Christopher Penn discuss the ins and outs of data science, answering questions such as:

- What is data science?

- What does a data scientist do?

- What isn’t data science?

- What’s the difference between data analytics and data science?

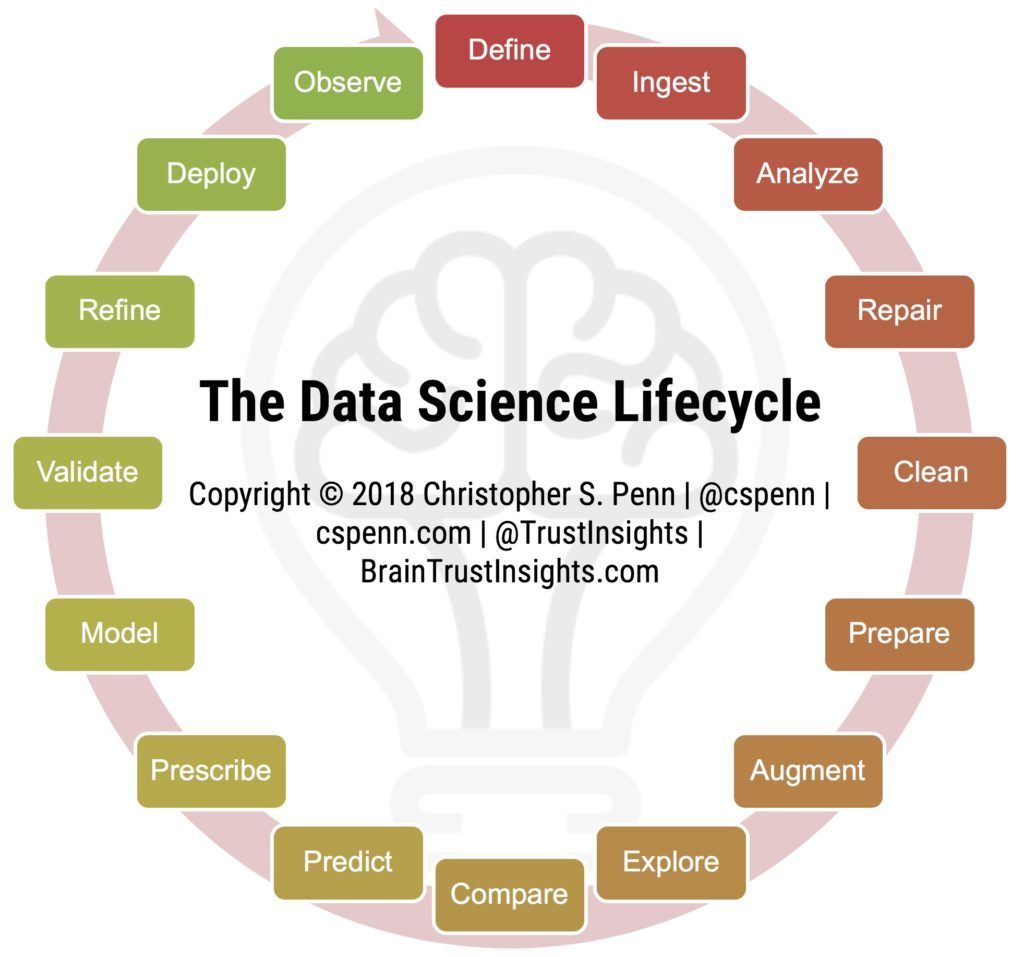

- The data science lifecycle

Discussed in this episode is the Trust Insights Data Science Lifecycle.

Download your copy of the lifecycle from our Instant Insights catalog here.

Machine-Generated Transcript

What follows is an AI-generated transcript. The transcript may contain errors and is not a substitute for listening to the episode.

Christopher Penn

Alright, so today we’re going to talk about data science. A lot of folks have been googling for things like what are data scientists, what is data science, what do data scientists do, and so on. So I figured I’d actually ask you for your perspective, because your background is more in management, medical and healthcare, and governance systems, as opposed to data science itself. So from your perspective, what does data science mean to you?

Katie Robbert

Um, you know, that’s an interesting question. Because when you hear the word science, you automatically think scientist, and you automatically assume that a data scientist has to have a PhD. So I’m definitely in that pool of people who have often misunderstood what a data scientist actually does. And so these days, my understanding is that a data scientist is somebody who is deep into analytics and AI and machine learning, and isn’t just someone who can execute, but is someone who can actually create the new thing: an innovator, a developer, a programmer. It’s a unique set of skills, and it’s tough to come by. So that’s sort of my high-level understanding: a data scientist is someone who can execute, but beyond that is really sort of the creator and the innovator of the new thing.

Christopher Penn

That’s interesting, because that’s what I think a lot of people think of as data science. But the term data science is, in some ways, probably more confusing than it is helpful, because it’s just using science and the scientific method with data. So for those who don’t remember the good old high school lessons on the scientific method, it’s: ask a question, define your variables, create a hypothesis, test, gather your data, analyze it, refine the hypothesis, and then either discard it or build a theory out of it, and then observe to see if it’s true in the real world. And that’s where I think a lot of people go wrong: they believe that a data scientist is a production role. And I think that’s an important distinction. By definition, if you’re using the scientific method, you’re not building production code and production stuff. You are exploring; you are an explorer. Whereas someone who’s a data analyst or a business intelligence systems administrator, I think, would be more the person who would take those findings and turn them into something in production. When you work with developers, how do you differentiate between the person experimenting with code and the person writing production code?

Katie Robbert

Hmm, that’s... you know what, as you’re saying that, my light bulb went off. That’s a really great way to think about it. So in my experience, what we had in the development world was the product architect, who I guess in this scenario would be equivalent to the data scientist, in that they’re sort of mapping things out and testing things and saying, well, what does this button do? And what if I put this over here? So they’re really sort of creating that ecosystem of the end product to be created, but it’s not production ready. And then they hand off those ideas and thoughts to the engineers and developers to say, okay, this is what I think we should do, let’s run with it. And the engineers and developers are the ones who would execute what the product architect had come up with. So I think that’s a really great analogy.

Christopher Penn

Do engineers ever do any large-scale experimentation? Like, oh, let’s try it and see what, you know, blows up?

Katie Robbert

Um, well, I think it’s kind of unfair, because that’s all they do. That’s all they would prefer to do, versus the actual thing that they’ve been tasked with. So the short answer is yes, of course they do. I think that all levels of developer, programmer, engineer, whatever you want to call them, have that curiosity and want to do that kind of experimentation. But I think the point that you’re making is that to truly be that product architect, or in this example, this data scientist, you need to be able to follow the scientific method start to finish, and not just blow things up. Anybody can push a button and say, what does this button do, and blow it up. But someone who has more training and more skills and more insight and understanding and experience will be able to say, okay, that didn’t work, how do I adjust to get closer to the result that I’m after?

Christopher Penn

Right. So in the software development lifecycle, what’s the general process to go from, hey, I have an idea for an app, to, hey, here’s a working product?

Katie Robbert

The general process almost always starts with a business case or requirements. So what’s the problem you’re trying to solve? What’s your hypothesis? What are you trying to prove out? And then you go from requirements to, depending on what the thing is, some upfront wireframes to figure out what this thing is going to look like. That’s not necessarily applicable in analytics, but it’s applicable in the sense that you need to figure out how someone is going to digest this thing. So you’re building towards that model, even if it’s not the exact thing. So you define it, you sketch it out, and then you pull together all of your requirements to say, what do I need to do this thing, all of your dependencies. And then you start to build it and experiment, with the goal of refining and iterating and, you know, testing the hypothesis.

Christopher Penn

Okay. One of the things you’ll find on the Trust Insights website, in our Instant Insights, is the data science lifecycle. And the process is probably pretty similar in a lot of ways, because we start with defining what the goal is: what is it we’re trying to prove or disprove, what are we trying to figure out? And then there’s a whole series of about six steps. You bring in data, either internal or external or both. You analyze the data for its integrity: is there stuff missing? Is there stuff corrupted? Is it usable? And then you have three phases of preparation, where you repair the data if the data is broken; you clean the data, so you remove or impute missing data; and then you prepare it, so you structure it to be actually usable. And then after that is augmentation, where you bring in additional data if you find out what you have isn’t enough. You were doing some work recently with a podcast reporting system, and the data is clean, and it’s not missing anything, but it is in no way prepared for usage.

Katie Robbert

No, not at all. And even after it was prepared for consumption, there were still questions of what does this mean, because it just wasn’t intuitive. So there were quite a few rounds of iteration of cleaning it up.
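
Editor’s illustration: the integrity, cleaning, and preparation steps described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the Trust Insights implementation. The record fields (`episode`, `date`, `downloads`), the sample values, and the choice of median imputation are all hypothetical and were picked only to make the steps concrete.

```python
from datetime import date
from statistics import median

# Hypothetical raw podcast report rows; field names and values are invented.
RAW_ROWS = [
    {"episode": "ep-01", "date": "2019-03-04", "downloads": "120"},
    {"episode": "ep-02", "date": "2019-03-11", "downloads": ""},     # missing value
    {"episode": "ep-03", "date": "2019-03-18", "downloads": "n/a"},  # corrupted value
    {"episode": "ep-04", "date": "2019-03-25", "downloads": "200"},
]


def parse_downloads(value):
    """Return the download count as an int, or None if missing or corrupted."""
    text = value.strip()
    return int(text) if text.isdigit() else None


def check_integrity(rows):
    """Integrity step: count rows whose download value is unusable."""
    unusable = sum(1 for row in rows if parse_downloads(row["downloads"]) is None)
    return {"rows": len(rows), "unusable_downloads": unusable}


def clean(rows):
    """Cleaning step: impute unusable counts with the median of the usable ones."""
    parsed = [parse_downloads(row["downloads"]) for row in rows]
    usable = [p for p in parsed if p is not None]
    fill = int(median(usable)) if usable else 0
    return [
        {**row, "downloads": fill if p is None else p, "imputed": p is None}
        for row, p in zip(rows, parsed)
    ]


def prepare(rows):
    """Preparation step: convert date strings to date objects, sort chronologically."""
    structured = [{**row, "date": date.fromisoformat(row["date"])} for row in rows]
    return sorted(structured, key=lambda row: row["date"])


report = check_integrity(RAW_ROWS)
prepared = prepare(clean(RAW_ROWS))
```

Flagging imputed rows with an `imputed` field keeps the later exploration phase honest about which values were observed and which were filled in. Dropping the unusable rows instead would be an equally valid cleaning choice, depending on the question being asked.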

Christopher Penn

Yep. And most folks in the data science industry will say that preparation, which is really these first seven phases, is about 80% of your time. Depending on the data set, it could even be higher; it could go to 90%. After that, you have exploration and comparison: once you know the data is solid, you have to actually figure out what questions you can ask of the data, and then compare it to previous time periods, other data sets, and things to understand it. And then you start the process of creating the actual model. And this is where you get to the rest of the scientific method. Prediction is nothing more than a hypothesis. Prescription is: if the prediction is true, what should we do with the data? Then we build a model, validate it, make sure it works, refine it, deploy it to production, and observe it. Now, in past clients and projects and things, where have you personally seen things go most off the rails?

Katie Robbert

You know, it’s funny that you’re asking me, because I was going to ask you the same question. It’s that front 80%. It’s that lack of planning. And I know that’s something that we’ve touched upon in other discussions, but it really is that lack of planning. And that’s true of any process, whether it’s the data science lifecycle or the software development lifecycle, which you could bucket into planning, defining, designing, building, testing, and deployment. It’s very similar, because the lifecycles are, to your point, all based off of the scientific method. But it’s that upfront planning portion where things go wrong, because it’s a lot easier to adjust a document than it is to adjust actual code. It’s a lot less expensive, and it’s a lot less time consuming.

Christopher Penn

In terms of planning, what are some of the things you’ve seen people not plan for?

Katie Robbert

Different scenarios, different user scenarios. How is someone expected to use this thing? Or how is someone expected to understand this thing? A lot of times, what happens with the planning process is the person who’s planning is thinking about themselves, or they’re trying to put themselves in the shoes of the end user, and it’s not quite right. And it’s really important to talk to end users about what it is that they actually need, or what they’re expecting to get. Because if you said to me, what kind of a widget do you want, I might say, um, I don’t know, just design one that works. Well, that’s pretty vague. And you’re going to design one that works for you, right? But it might not work for me. And I think that’s where things often go wrong. Because you’ll go back and be like, well, you asked me to do this thing. And then it just wasn’t clearly defined, and the end user isn’t happy with what they got.

Christopher Penn

What if there is no clear definition? So, literally a real-time case study: we are in the midst of pulling thousands of search terms for an upcoming healthcare conference that we’re going to be keynoting. And the question was, how do we isolate and understand the voice of the patient? What is it that the patient is saying? And we know from the wonderful book Everybody Lies that people put things into Google that they would never ask another human being, because of embarrassment, I think. And so Google search data is actually one of the richest sources of data for healthcare information. But we’ve been through, like, three or four cycles of commentary this morning. As someone who’s not just pushing the buttons, but is actually trying to figure out this project, how do you see this cycle being applied in this example?

Katie Robbert

Well, you know, it’s interesting, that’s actually where agile fits in. Because agile is all about those iterations: you do a small chunk, test it, adjust, and iterate. And so I think one of the things that agile does in this process is it allows you to break it down into smaller pieces, test it, and then change direction if you need to. So that’s sort of what we’ve been doing this morning. Versus you trying to define the whole thing all at once, and then put it in front of an end user and have to change direction after you’ve already put days and hours and weeks of work into it, you did a little bit, tested it, adjusted a little bit, tested it, adjusted it. And I think that’s one of the things that’s really nice about how a very stringent process like a data science lifecycle fits so nicely with an iterative process like agile.

Christopher Penn

But, I guess, when you have something like this kind of project, where there isn’t necessarily a clear remit per se, other than find something interesting, how do you make that more impactful? Because it’s entirely possible that you could get to the exploratory phase, you’ve done all the preparation and the data is in good condition, and then you’re like, actually, this doesn’t say anything.

Katie Robbert

I think that’s always a risk when your requirements aren’t well defined. I mean, that’s exactly sort of what you’re describing. It’s the requirements aren’t well defined. We’re taking our best guess.

So in those instances where I don’t have defined requirements, your best bet is to keep asking questions and keep putting it in front of people, and keep saying, does this look right? Now, “does this look right” is still pretty vague, because you might not be asking the right person or you might not be asking the right question. So a lot of that is where intuition and instinct and experience come in. You put something in front of me this morning and said, does this look right? And I said, it’s not quite there, try tweaking it this way, and it started to get more towards what you were after with that patient voice. But we’re only two people. So in those instances, you need a wider audience, a diverse group of people, to really understand. And again, it’s all about that user testing and validation.

Christopher Penn

Yeah, and it’s challenging, because if you don’t have the domain knowledge, you run the risk of creating stuff that someone with domain knowledge will look at and go, um, nope, that’s clearly wrong. And if it’s something you’re putting out publicly, that could go badly pretty quickly for you.

Katie Robbert

Yeah. And so what do you do in that situation? When you don’t necessarily have the domain knowledge?

Christopher Penn

I try to find somebody who does. It’s part of the benefit of having a large, literally a large, social network: being able to ask somebody, hey, can I borrow 15 minutes of your time to ask a question about something, and see what they come up with. See if they come back and say, yeah, you’re on the right path, or, wow, whatever gave you that idea?

Katie Robbert

I think that’s so important, and I don’t want to gloss over it. Because one of the things about being a data scientist, or really anybody in a higher-level leadership position, is being able to admit that you might not know everything, and being able to reach out to other people to say, can you guys check this? It doesn’t mean that you’re any less intelligent about the thing you’re doing. It means that you have the self-awareness to bring in other people who might know more than you about that thing. And I think that, in the data science realm, is so important, because you can’t know everything. You can’t know everything about every subject, every industry, and that’s where you need to bring in those experts. I just didn’t want us to miss the importance of that point.

Christopher Penn

Yeah, because you can get a lot of people who know a tiny bit about something and talk a really good game. But then once you get into the actual work, you’re like, wow, this person actually doesn’t know anything about this at all.

Unknown

Mm hmm.

Christopher Penn

Okay. In terms of data science applications and things, it’s interesting, because data science, like the scientific method in general, can be applied to anything. And certainly there’s no shortage of data. Where do you see the risk of overwhelming somebody with data? I mean, for example, as a data scientist, and I would argue kind of an odd one, because my background is not in statistics, it is very easy for me to back the truck up and just pour a bunch of stuff on somebody’s desk. I don’t necessarily know that’s the best strategy for communicating something. So how do you manage someone like me, so that the end outcome is actually useful?

Katie Robbert

That’s a big question. Um, well, honestly, I try to break it down really simply. One of the gut checks that I often use with someone of your skill set, who is so smart and just wants to give someone all of the data, is, what do I say to you all the time? If I can’t figure it out in 10 seconds, then it’s too complicated. So that’s always my very high-level benchmark for the data that you’re putting in front of me. Because I’m used to the way that you operate, I can figure out what you’re trying to get at. But I always try to put on my consumer hat: does this make sense to me at first glance, or am I really struggling to figure out what it is? And so I think it’s about challenging someone like you to break it down to even more simple, bite-sized data points. And that’s hard for anybody. Data is not easy to communicate with, and so in and of itself it’s a skill set to be able to do that effectively, every single time.

Christopher Penn

How do you deal with someone who doesn’t adhere to process? Because the data science lifecycle is very, very regimented. If you skip steps, it’s like cooking. For some things, particularly baking, there are steps you absolutely cannot skip. There is no way you can skip letting a dough rise; if you do that, you’re going to make a piece of cardboard. Not that data scientists necessarily do that, but we have seen plenty of cases with clients in the past where someone just wanted the answer as quickly as possible, even if it wasn’t necessarily the right way to do it. How do you navigate something like that? You fire them?

Katie Robbert

No, no. You know, in all honesty, I think that there is a time and a place for shortcuts; it’s not very often. And I think that what you look for in someone who wants to call themselves a data scientist, or even a really good data analyst, is someone who’s unwilling to compromise on what the process actually looks like, and who makes sure that they explore all angles and follow the process correctly, because that way you have a higher guarantee of getting the answer correct, or providing accurate data. Versus if you just look and say, oh look, I got 100 shares on that Twitter post. Well, yeah, that’s what it’s telling you right off the bat, but that might not actually be what’s going on. There might be bots involved, or it might have been retweeted a bunch of times, but your stakeholder wasn’t after retweets; they wanted to know how many people actually shared something. It’s not a great example. But basically, you want someone who is willing to follow the process and can push back and say, I need more time, or I need more information to get you an accurate answer, versus someone who’s willing to just go ahead and take those shortcuts.

Christopher Penn

What about, I mean, if it’s someone you manage, certainly there are ways to deal with that. What about when it’s the customer, either internal, like the CMO is saying, hey, I need this tomorrow, or it’s a client or a partner? We have both worked in the agency world and had plenty of times where somebody said, oh, I need this for a pitch deck for tomorrow, and you’re like, well, that’s a week-long project. How do you navigate that, where someone, because they have no understanding of data science and what data scientists do, either has unrealistic expectations or is just such an incredibly poor planner themselves that they get themselves into hot water?

Katie Robbert

I mean, first and foremost, I always push back and really try to explain: you can’t have it tomorrow, and here’s why. Here’s what happens. And so the way that I personally manage that example is really trying to help the person understand: okay, we can take a shortcut, but here’s what happens if we do that, here are the risks. Really sort of outlining what happens when you do that, and the scenarios. So really giving them the opportunity to say, okay, I can live with the risk of the data being incorrect, because I just need something to show tomorrow. And having that paper trail to say, I told X person that the data might not be correct if I deliver it tomorrow. So if it does come back, then you’ve documented that you’ve had that conversation, and that the other person who is accepting the data that might not be correct has said, yep, I can live with that risk. And for you, as the data scientist or as the data analyst, that’s really the best that you can do, because you’re always going to face those situations. So it’s just making sure that it’s clearly understood from the beginning: here’s what happens if you don’t let me do my job correctly.

Christopher Penn

Let’s tackle one last thing on data science, because it is a hot topic, particularly in artificial intelligence. And that is: in the data science lifecycle, just like in the software development lifecycle, where does ethics fit in?

Katie Robbert

Um, I think it fits in through everything, every single phase of the lifecycle, because you’re always going to have people with different agendas. And so it’s really trying to take all of the information and tell the story in sort of a black and white way. The easy answer is, it’s when you’re validating and deploying and observing. So if I say this only got 100 retweets, but my CMO was like, well, how do we make it look like it’s 200? Again, a really bad example, but that’s sort of a very clear-cut one: well, that’s not what the data is saying. So you can’t manipulate the data to say something it doesn’t.

Unknown

I mean, you can.

Unknown

You could, and that’s the best thing about the ethics.

Christopher Penn

Right, exactly. And we’re seeing the Jurassic Park syndrome here in a lot of cases in AI. For those of you who don’t remember, in the first movie, Dr. Ian Malcolm says, your scientists were so concerned with whether they could that they never stopped to think whether they should.

Unknown

Mm hmm.

Christopher Penn

Alright, so that’s going to wrap up this episode. As always, please subscribe to the YouTube channel and to the podcast. If you’re listening on the website, you can find the podcast on iTunes, Google Podcasts, and wherever fine podcasts are consumed. And we will talk to you next time.